Generating Image Captions in Indonesian Using a Deep Learning Approach Based on Vision Transformer and IndoBERT Architectures

Abstract

This research develops an Indonesian image captioning system built on two deep learning architectures, Vision Transformer (ViT) and IndoBERT. It addresses the challenge of generating accurate and contextually relevant captions for images, a central task at the intersection of computer vision and natural language processing. The main contribution is the integration of ViT for visual feature extraction with IndoBERT for linguistic representation, with the aim of overcoming limitations of existing models by improving the semantic understanding and contextual relevance of generated captions. The methodology comprises data preprocessing, model training, and evaluation on the Flickr8k dataset translated into Indonesian, with several data augmentation techniques applied to improve model performance. In the combined architecture, ViT extracts visual features and IndoBERT processes textual information. Performance is evaluated with BLEU and METEOR scores, and training and validation loss curves provide insight into the model's learning process. The results indicate that the proposed model outperforms traditional CNN+LSTM and Transformer-based models on both BLEU and METEOR. A detailed analysis of these results highlights the advantages of using ViT and IndoBERT for this task. The findings have implications for real-world applications such as automatic image captioning for visually impaired users, content tagging for multimedia platforms, and improvements in machine translation. Future research can incorporate human evaluation and larger datasets to improve generalizability.
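The abstract reports BLEU scores without saying how they were computed. As an illustration of what the metric measures, here is a minimal sketch of sentence-level BLEU in plain Python. It assumes whitespace tokenization, uniform n-gram weights, and no smoothing. The function names `ngram_counts` and `sentence_bleu` are hypothetical and are not taken from the paper, whose evaluation tooling may differ.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, references, max_n=4):
    """Simplified sentence-level BLEU (uniform weights, no smoothing)."""
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        total = sum(cand.values())
        # Clip each candidate n-gram count by its maximum count in any reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngram_counts(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand.items())
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, one empty precision zeroes the score
        log_precision_sum += math.log(clipped / total) / max_n
    # Brevity penalty uses the reference length closest to the candidate length.
    c = len(candidate)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_precision_sum)


# Illustrative Indonesian caption ("a dog runs on the beach"), not from the dataset.
reference = "seekor anjing berlari di pantai".split()
candidate = "seekor anjing berlari di pantai".split()
print(sentence_bleu(candidate, [reference]))
```

Because this sketch applies no smoothing, short captions that miss every 4-gram score zero. Corpus-level BLEU or a smoothed variant is the usual choice when reporting results on a dataset.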

Keywords

Computer Vision; Deep Learning; Image Captioning; IndoBERT; Vision Transformer

DOI: https://doi.org/10.47738/jads.v6i2.672

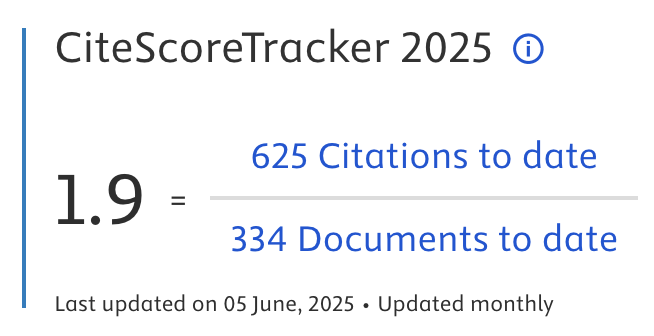

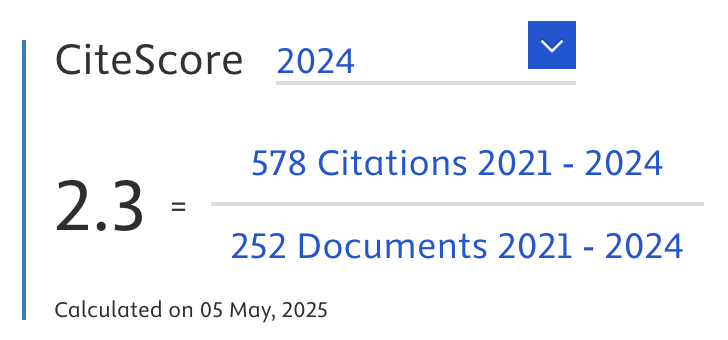

Citation Analysis:

Refbacks

- There are currently no refbacks.

Journal of Applied Data Sciences

| ISSN | : | 2723-6471 (Online) |
| Collaborated with | : | Computer Science and Systems Information Technology, King Abdulaziz University, Kingdom of Saudi Arabia. |
| Publisher | : | Bright Publisher |
| Website | : | http://bright-journal.org/JADS |
| Email | : | taqwa@amikompurwokerto.ac.id (principal contact) |
| | | support@bright-journal.org (technical issues) |

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0
