Improving Evaluation Metrics for Text Summarization: A Comparative Study and Proposal of a Novel Metric

Abstract

This study evaluates and compares evaluation metrics for text summarization and proposes a new metric that measures summary quality holistically. Four commonly used metrics, ROUGE, BLEU, METEOR, and BERTScore, were tested on three datasets: CNN/DailyMail, XSum, and PubMed. ROUGE achieved an average score of 0.65 but struggled to capture semantic nuances, particularly for abstractive summarization models. BERTScore, which incorporates semantic representations, performed better, with an average score of 0.75. To address these limitations, we developed the Proposed Metric, which combines semantic similarity, n-gram overlap, and sentence fluency. It achieved an average score of 0.78 across the datasets, surpassing the conventional metrics and providing more accurate assessments of summary quality. The contribution of this research is a single metric that integrates the semantic and structural aspects of summary evaluation. The findings highlight the Proposed Metric's ability to capture contextual coherence and semantic alignment, which makes it suitable for real-world applications such as news summarization and medical research. These results underline the importance of holistic metrics for evaluating text summarization models.
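The abstract names three components (semantic similarity, n-gram overlap, and sentence fluency) but does not state how they are combined. The sketch below is one illustrative possibility, not the authors' formula: it assumes a weighted average with equal default weights. The function names (`ngram_overlap_f1`, `bow_cosine`, `combined_score`) are hypothetical, the bag-of-words cosine is only a dependency-free stand-in for an embedding-based similarity such as BERTScore, and the fluency score is assumed to come from an external scorer.

```python
from collections import Counter
import math


def ngrams(tokens, n):
    """Return a multiset (Counter) of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def ngram_overlap_f1(candidate, reference, n=1):
    """ROUGE-N-style F1 based on clipped n-gram matches (whitespace tokens)."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    matches = sum((cand & ref).values())  # multiset intersection clips counts
    if matches == 0:
        return 0.0
    precision = matches / sum(cand.values())
    recall = matches / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def bow_cosine(candidate, reference):
    """Stand-in for semantic similarity: cosine of bag-of-words count vectors.

    Assumption: a real implementation would compare contextual embeddings
    instead; this placeholder only keeps the sketch free of dependencies.
    """
    a = Counter(candidate.lower().split())
    b = Counter(reference.lower().split())
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def combined_score(overlap, semantic, fluency, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Weighted average of three component scores, each expected in [0, 1].

    Assumption: the weights and the averaging scheme are illustrative only.
    """
    w_o, w_s, w_f = weights
    total = w_o + w_s + w_f
    return (w_o * overlap + w_s * semantic + w_f * fluency) / total


if __name__ == "__main__":
    reference = "the study compares summarization metrics on three datasets"
    candidate = "the study compares metrics on three datasets"
    overlap = ngram_overlap_f1(candidate, reference)
    semantic = bow_cosine(candidate, reference)
    fluency = 0.9  # hypothetical value from an external fluency scorer
    print(round(combined_score(overlap, semantic, fluency), 3))
```

Normalizing by the sum of the weights keeps the result in [0, 1] whenever the components are, so the weights can be tuned (for example, to emphasize semantic similarity for abstractive summaries) without rescaling.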

Article Metrics

Abstract: 158 Viewers PDF: 168 ViewersKeywords

Full Text:

PDFRefbacks

- There are currently no refbacks.

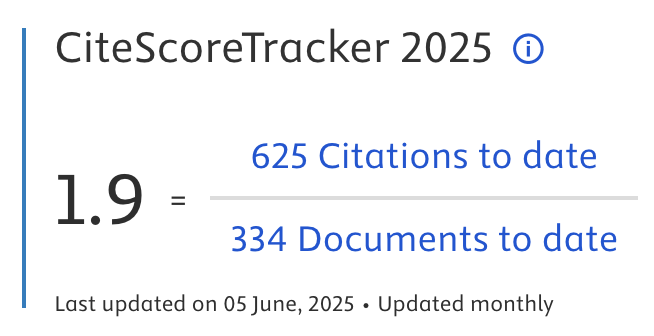

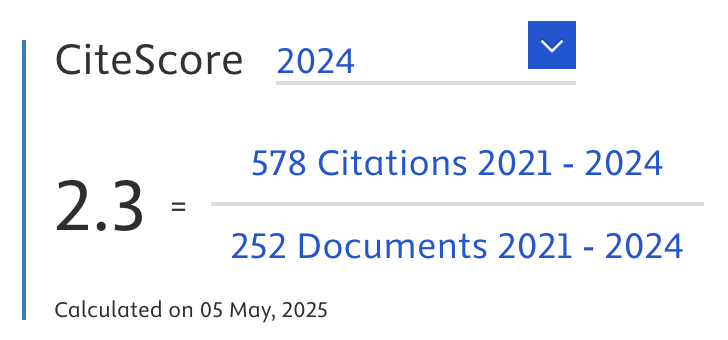

Journal of Applied Data Sciences

| ISSN | : | 2723-6471 (Online) |
| Collaborated with | : | Computer Science and Systems Information Technology, King Abdulaziz University, Kingdom of Saudi Arabia. |
| Publisher | : | Bright Publisher |
| Website | : | http://bright-journal.org/JADS |
| Email | : | taqwa@amikompurwokerto.ac.id (principal contact) |
| | | support@bright-journal.org (technical issues) |

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0
